Executing the Test Plan

Once a test plan has been created, you can proceed to execute it and evaluate the agent's performance. The execution process allows you to run the test cases against a specific version of the agent and analyze the outcomes.

Starting the Execution:

- In the Test Plan grid, locate the test plan you wish to execute.

- In the action column, click on "Test Execution". You will be taken to a screen displaying a grid with all previous executions of the selected test plan.

- To initiate a new execution, press the "New" button. This opens the New Execution screen, where you define the execution parameters.

Configuring execution parameters

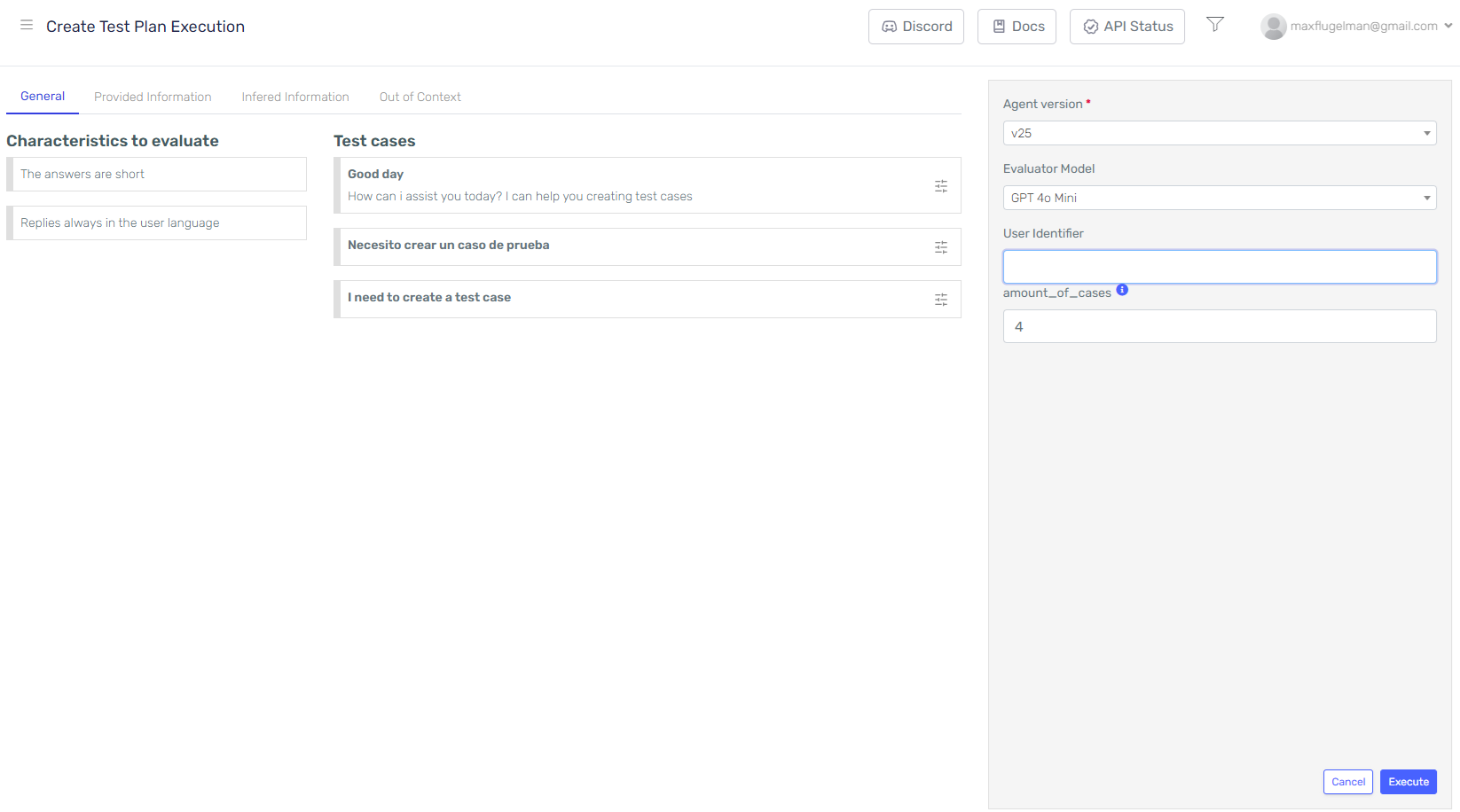

In the New Execution screen, you will need to configure the following parameters:

- Agent Version: Select the version of the agent that you want to test. The test plan runs against that specific version, so you can test a version before it is published.

- Evaluator Model: Choose the evaluator model, which is the AI model responsible for making the final assessments. The evaluator model analyzes the agent’s responses and determines whether they meet the defined expectations.

- Input Parameters and Required Data: If the agent version has input parameters or requires a user identifier, you can configure them before running the test. These parameters are used as defaults for every test case.

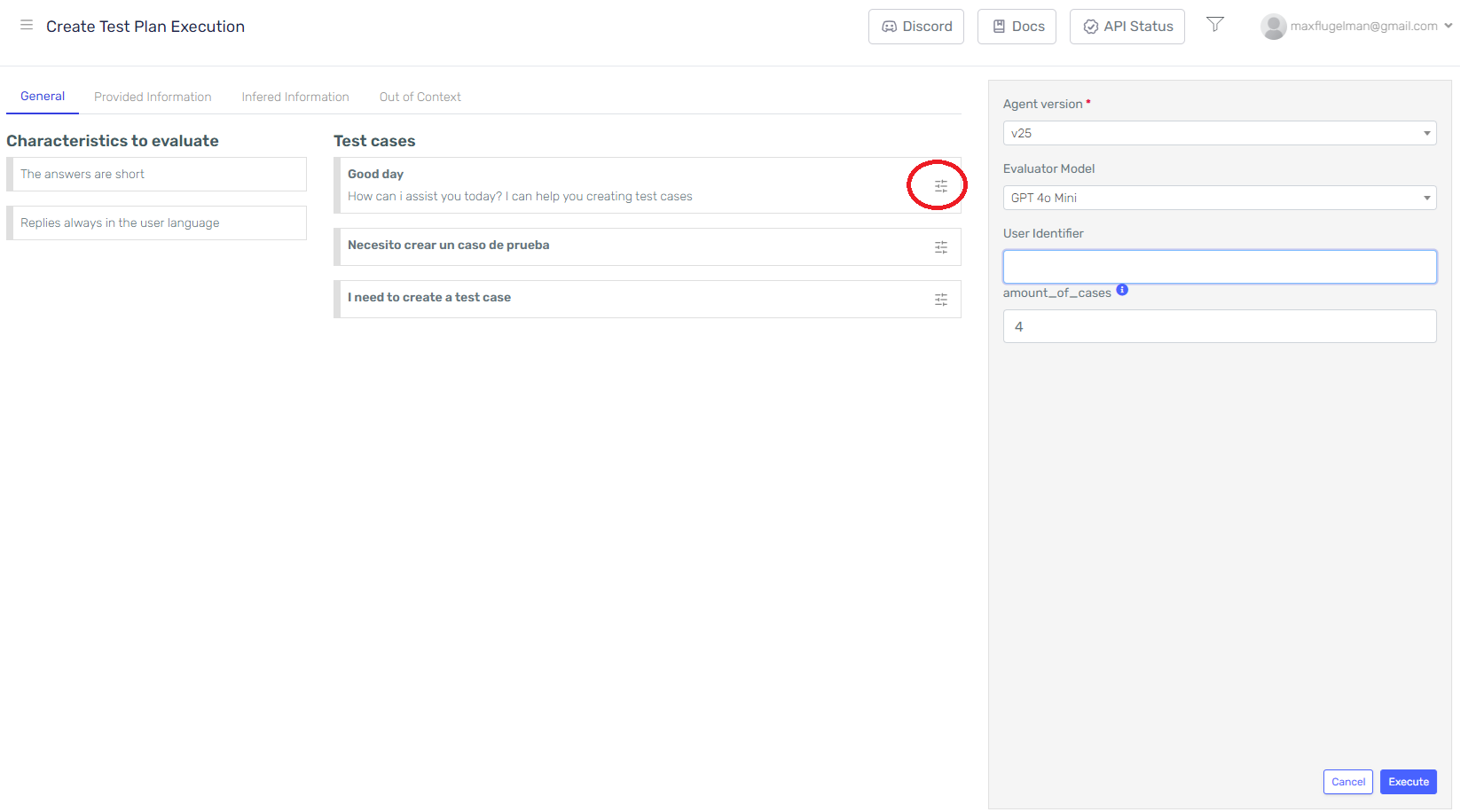

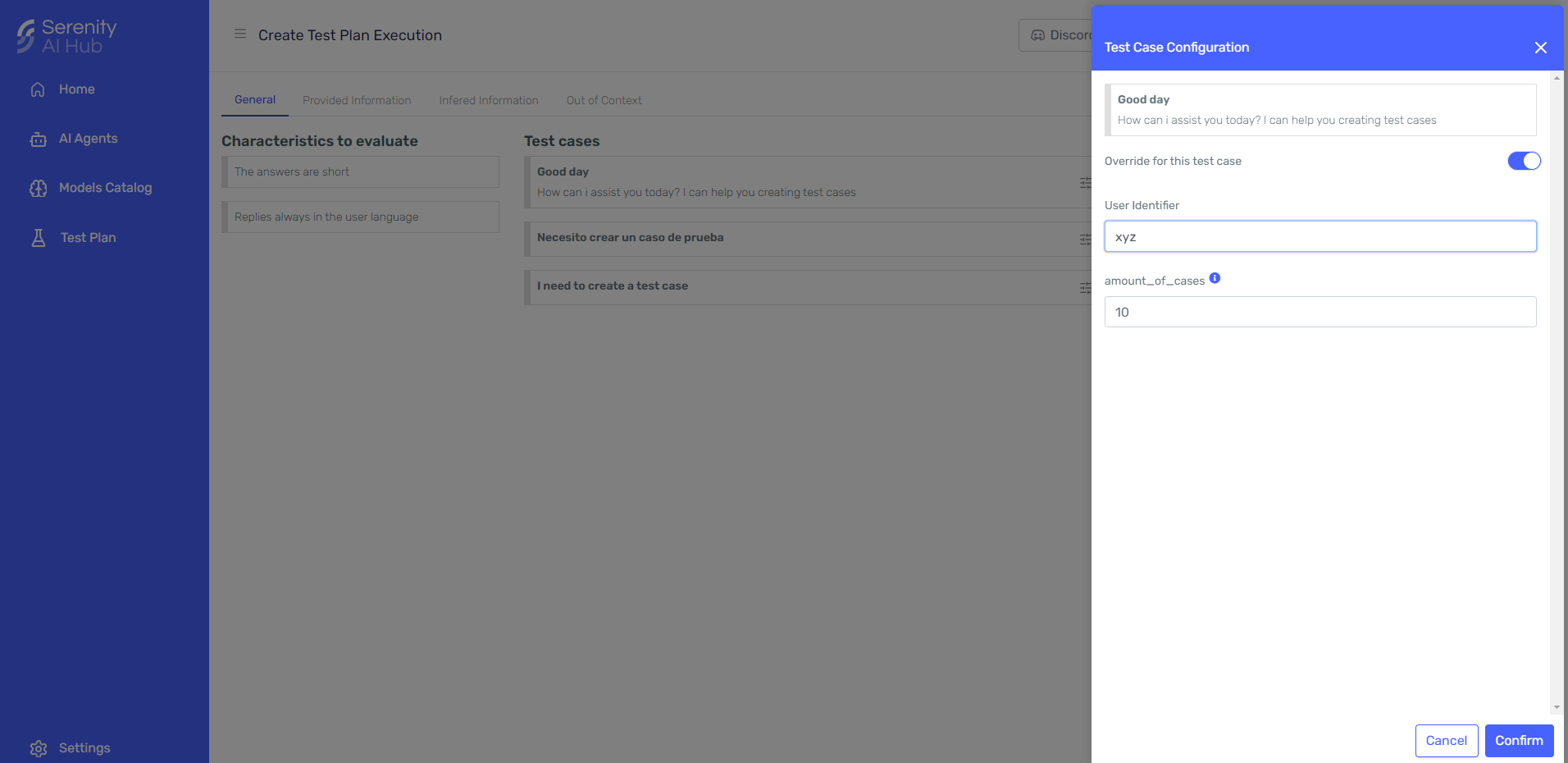

Overriding input parameters

Any test case can override the default input parameters. To do so, press the configuration button on the test case you want to override. A side panel opens where you can configure parameters specific to that test case.

Select a test case to override

Select a test case to override Configure the override properties for this test case

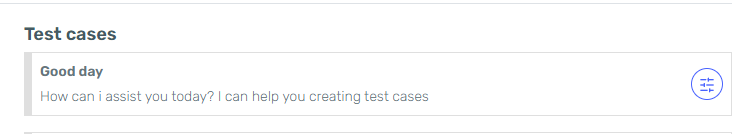

Configure the override properties for this test case Test cases that have parameters overriden are marked

Test cases that have parameters overriden are marked
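The way defaults and overrides combine can be pictured as a key-by-key merge. The following is a hypothetical Python model, not the product's implementation: it assumes that an override replaces the default value for the same parameter and that parameters without an override keep the execution-level default. The names `resolve_parameters` and `is_overridden` and the sample parameters are illustrative only.

```python
from __future__ import annotations

from typing import Any, Mapping


def resolve_parameters(
    defaults: Mapping[str, Any],
    overrides: Mapping[str, Any] | None = None,
) -> dict[str, Any]:
    """Return the effective input parameters for one test case.

    Assumption: an override replaces the default for the same key, and
    keys without an override keep the execution-level default.
    """
    effective = dict(defaults)       # copy so the shared defaults are never mutated
    if overrides:
        effective.update(overrides)  # per-test-case values win on conflicts
    return effective


def is_overridden(overrides: Mapping[str, Any] | None) -> bool:
    """Hypothetical mirror of the UI marker: True when a test case has any override."""
    return bool(overrides)


if __name__ == "__main__":
    defaults = {"language": "en", "region": "US"}   # illustrative parameter names
    test_case_overrides = {
        "tc-1": None,                # uses the defaults only
        "tc-2": {"language": "es"},  # overrides a single parameter
    }
    for case_id, overrides in test_case_overrides.items():
        marker = "*" if is_overridden(overrides) else " "
        print(marker, case_id, resolve_parameters(defaults, overrides))
```

Copying the defaults before applying overrides keeps one test case's override from leaking into the next, which matches the idea that the defaults apply to every test case unless that specific case changes them.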

Sending the test cases for evaluation

After configuring the execution parameters, you are ready to run the test cases.

Executing the Test:

Press the "Execute" button to start the test execution. The system follows a series of steps to evaluate the agent’s performance and provide detailed results. This process runs in the background, and results are processed in batches.

Test Execution Steps:

- Agent Response: Each test case is executed against the selected version of the agent, and the agent’s response is recorded.

- Evaluation: The evaluator model will then assess each test case, analyzing the agent’s response based on the predefined characteristics for that dimension. The evaluation process will:

  - Compare the agent’s response to the expected result.
  - Evaluate the response based on the dimension (e.g., General, Provided Information, Inferred Information, Out of Context).
  - Provide a score or result based on how well the agent’s response aligns with the characteristics and expected outcome.

- Results: Once the evaluation is complete, you will be presented with the results, including scores for each dimension and characteristic. This allows you to identify areas where the agent performed well and areas that may need improvement.
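The three steps can be modeled as a small batch loop. This is a hypothetical Python sketch under stated assumptions, not the product's API: `run_agent` and `evaluate` stand in for calls to the selected agent version and the evaluator model, each test case carries a single dimension, scores are numbers between 0 and 1, and results are aggregated per dimension only. The real system runs in the background and also reports per-characteristic scores, which the sketch omits.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class CaseSpec:
    case_id: str
    prompt: str
    expected: str
    dimension: str  # e.g. "General", "Provided Information", "Out of Context"


@dataclass
class CaseResult:
    case_id: str
    dimension: str
    response: str
    score: float


def batches(items: list[CaseSpec], size: int) -> Iterator[list[CaseSpec]]:
    """Split the test cases into fixed-size batches (batch size is an assumption)."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def execute_plan(
    cases: list[CaseSpec],
    run_agent: Callable[[str], str],             # hypothetical: calls the selected agent version
    evaluate: Callable[[CaseSpec, str], float],  # hypothetical: evaluator model returns a score
    batch_size: int = 10,
) -> list[CaseResult]:
    results: list[CaseResult] = []
    for batch in batches(cases, batch_size):
        for case in batch:
            response = run_agent(case.prompt)   # step 1: record the agent response
            score = evaluate(case, response)    # step 2: the evaluator assesses it
            results.append(CaseResult(case.case_id, case.dimension, response, score))
    return results


def average_by_dimension(results: Iterable[CaseResult]) -> dict[str, float]:
    """Step 3: average the scores per dimension for the results view."""
    scores: dict[str, list[float]] = {}
    for r in results:
        scores.setdefault(r.dimension, []).append(r.score)
    return {dim: sum(vals) / len(vals) for dim, vals in scores.items()}


if __name__ == "__main__":
    cases = [
        CaseSpec("1", "hi", "hi", "General"),
        CaseSpec("2", "x", "y", "General"),
        CaseSpec("3", "a", "a", "Out of Context"),
    ]
    # Stub agent that echoes the prompt; stub evaluator that checks for an exact match.
    results = execute_plan(
        cases,
        run_agent=lambda prompt: prompt,
        evaluate=lambda case, response: 1.0 if response == case.expected else 0.0,
        batch_size=2,
    )
    print(average_by_dimension(results))
```

Passing the agent and the evaluator as callables keeps the loop independent of any particular model, so both can be replaced with stubs, as in the usage block, when reasoning about the flow.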